Imagine you generated an amazing AI image. Now you ask yourself, “Are Stable Diffusion images unique? Can someone else generate the exact same image as me?”

It’s a valid concern.

And in this article, I will try to answer that question.

What Determines a Unique Image?

In short, every image you generate with Stable Diffusion is unique. However, the difference between two images can range from dramatic to barely noticeable.

The generations from above are unique in some parts of the image but overall extremely similar. If you still consider them unique, then every AI-generated Stable Diffusion image is unique.

However, if you are like most of us, this result won’t be satisfying.

So what does it take to make a completely unique image?


The Chances of Getting The Same Image

The chances of generating the same AI image in Stable Diffusion as another person are very low.

The reason is quite simple – variables.

To generate an AI image, you have to make some choices:

- Checkpoint

- Prompt

- Negative prompt

- Sampling steps

- Sampling method

- Width and height

- CFG Scale

- Highres. fix?

- Denoising strength

- Highres steps

- Seed

- Clip skip

- Extra Networks (like Lora or Textual Inversion)

- ControlNet

And that’s not including post-processing such as upscaling, inpainting, outpainting or img2img.

With so many variables, each offering multiple choices, you can only imagine how unlikely it is that someone will choose all of the exact same options as you.

The chances are slim to none.
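To get a rough feel for the numbers, here is a minimal Python sketch that multiplies together the number of options for a few of the settings listed above. The option counts are made-up assumptions for illustration, and the 32-bit seed range is also an assumption about the tool you are using, so treat the result as a ballpark and not a real probability. The prompt is left out entirely, since the number of possible prompts is practically unlimited.

```python
import math

# Hypothetical, deliberately conservative counts of options per setting.
# These numbers are illustrative assumptions, not measurements.
choices = {
    "checkpoint": 100,
    "sampling_steps": 31,   # e.g. 20 through 50
    "sampling_method": 10,
    "size": 20,             # a handful of width/height pairs
    "cfg_scale": 19,        # e.g. 1 through 10 in steps of 0.5
    "seed": 2**32,          # assumed 32-bit seed range
}


def total_combinations(options):
    """Multiply the number of choices for every independent setting."""
    return math.prod(options.values())


combos = total_combinations(choices)
print(f"{combos:,} possible setting combinations")
print(f"Chance of a random match: about 1 in {combos:.2e}")
```

Even with these small guesses, and before a single word of the prompt is considered, the number of combinations is far too large for two people to land on the same one by accident.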

That being said, if you opt to use a specially trained checkpoint or Lora, they often produce similar style images, similar faces, etc.

Or, if you use a specific artist in your prompt, for example, “…art by alphonse mucha”, then the stylistic influence may make your AI generation similar to another person’s.


Testing Stable Diffusion Variables

Let’s run a few quick tests on the effects each variable has on the final outcome.

Hopefully, this will help us understand which settings deserve the most attention and customization, as well as how likely it is to generate a non-unique image.

For all of the tests below, every setting and variable is the same, except for the one we are testing.


Checkpoint

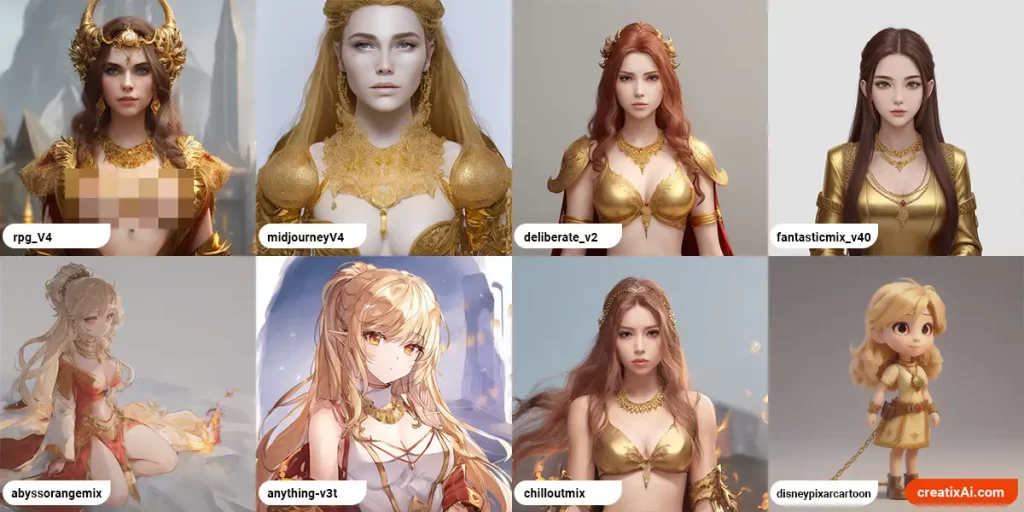

Let’s begin with testing different checkpoints. By the way, you can download checkpoints from civitai.com.

Prompt: young female fire witch, wearing a gold necklace. (medium shot:1.3), intricate metal, fire flames. best quality, masterpiece, highly detailed, concept art

Negative: (close-up:1.3), bad art, low quality, mutated, watermark, signature, extra limbs, undersaturated, low resolution, disfigured, deformations, text

Based on these specific checkpoints, all of the returned images are unique. Although deliberate and chilloutmix have a similar composition, hair and top clothing – the two images still look quite distinct.

You might get different results from testing models that were trained on similar image datasets or that were merged together to create a new model.

Also, certain features and styles will look very similar despite your prompts in some models.

Verdict: 8/10

The same prompt with a different model will most likely create a completely unique image. However, a different prompt on one model that was trained on a limited dataset may produce similar results.

Prompt

To test the prompt, we will look into removing and adding words, using synonyms, emphasis, artists and word order. (left to right)

- young female fire witch… (the original prompt)

- female fire witch… (removing the word “young”)

- young female fire witch, magical, wearing a gold necklace… (adding a word)

- youthful female fire witch, wearing a gold necklace… (using a synonym)

- young female fire sorceress, wearing a gold necklace… (using a synonym)

- young female (fire witch:1.2), wearing a gold necklace… (emphasis)

- art by Michelangelo, young female fire witch… (art styles)

- wearing a gold necklace, young female fire witch… (changing order)

There are so many different modifiers you can use in your prompt: styles, medium, the color of specific objects, lighting, clothing, emotions, postures, etc.

I’ve only tested one change at a time at the start of a prompt, but there are many words and possibilities for change.

While the final generated images look similar, I wanted to show you how big an impact each word has on a single image.

Verdict: 10/10

Every word in your prompt affects the generated image. Unless you are copying prompts from someone else, chances are your ideas are unique, and so is the result.
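The emphasis syntax used in the prompts above, such as (fire witch:1.2) or (medium shot:1.3), attaches a weight to part of the prompt. As a rough illustration of how such a prompt breaks down into weighted pieces, here is a simplified Python sketch. It is not the parser your Stable Diffusion interface actually uses: the function name is made up, and it only handles the plain (text:weight) form without nesting.

```python
import re

# Matches "(some text:1.3)" style emphasis as used in the prompts above.
EMPHASIS = re.compile(r"\(([^():]+):([0-9]*\.?[0-9]+)\)")


def parse_emphasis(prompt):
    """Return a list of (text, weight) pairs; unweighted text gets 1.0."""
    parts = []
    pos = 0
    for match in EMPHASIS.finditer(prompt):
        # Plain text before the emphasized chunk keeps the default weight.
        before = prompt[pos:match.start()].strip(" ,")
        if before:
            parts.append((before, 1.0))
        parts.append((match.group(1).strip(), float(match.group(2))))
        pos = match.end()
    rest = prompt[pos:].strip(" ,")
    if rest:
        parts.append((rest, 1.0))
    return parts


for text, weight in parse_emphasis(
    "young female (fire witch:1.2), wearing a gold necklace"
):
    print(f"{weight:.1f}  {text}")
```

Seen this way, adding emphasis is one more per-word setting on top of the words themselves, which is part of why two prompts rarely end up identical.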

Negative Prompt

What goes in the negative prompt also has a big impact on the final image. Make sure you read this part as well before copying a prompt from someone else.

For this example, let’s do a different prompt:

3D sculpture of an orc face, orc bust, (best quality, masterpiece), 3D, blender render, 8k, ultra-detailed, simple background

Here are the negative prompts (left to right):

- Empty

- Green

- Lowres

- Realistic

- Cartoony

- Depth of field

- Deformed

- Text, lowres, cartoony, depth of field, deformed

Including just one word has a meaningful impact. So if you are looking for a realistic photo, including words in the negative prompt like “cartoony, art, painting, stylized” should help.

Verdict: 9/10

You can judge for yourself which version you like most, but the fact remains: a single word in the negative prompt will affect your generation.

Sampling Steps

People often use between 20 and 50 sampling steps. Let’s test the sampling steps on Euler a from 10 to 80.

As you can see from the image above, the generations are somewhat unique. The most visible differences appear between 10 and 70 steps, while going from 70 to 80 changes the least.

What remains unchanged is the overall approximate positioning and color palette of the elements.

The moon goes from blurry (10) to detailed and defined (80). The kid also loses the simplified stylization and gains more and more detail.

Verdict: 4/10

Sampling steps do have an effect on the overall image. But, if everything else stays the same – the images are too similar to be considered truly unique.

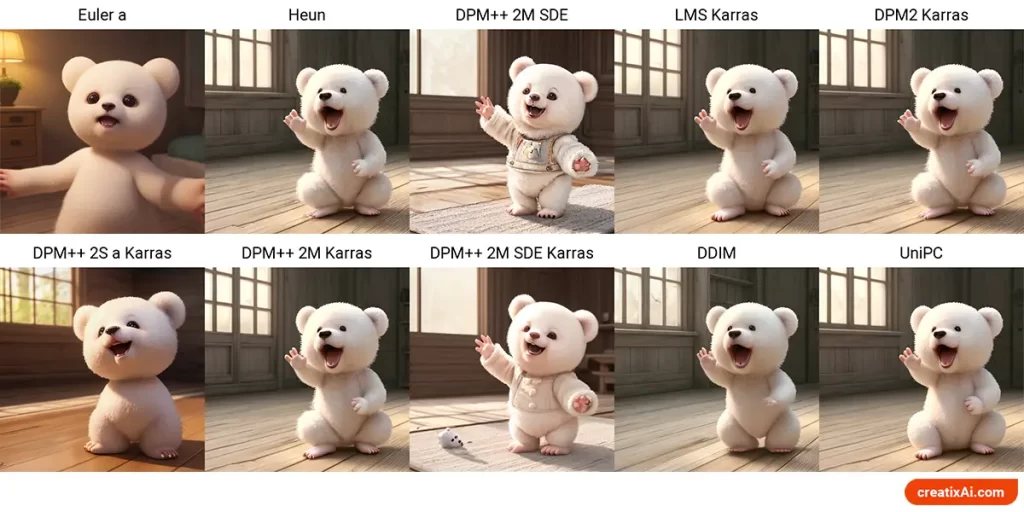

Sampling Method

There are many sampling methods available. For this test, I will only use 10 of them.

Verdict: 2/10

As you can see, some samplers produce the same images, while others make completely unique ones. Still, most users stick to the most popular samplers, and you probably will too. So focusing on the sampler for uniqueness doesn’t help much.

Width and Height

Changing the size from 512×512 to 576×576 keeps the same ratio, but the generated image will look completely different!

Verdict: 10/10

Changing width and/or height parameters will greatly affect the final image. Something to keep in mind.
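One likely reason a small size change has such a large effect is that the starting noise has a different shape. The Python sketch below assumes the usual Stable Diffusion 1.x layout, where the image is generated in a latent space with 4 channels at 1/8 of the pixel resolution. The function names are hypothetical and only there for illustration.

```python
def latent_shape(width, height, channels=4, downscale=8):
    """Return the (channels, height, width) shape of the latent noise.

    Assumes a Stable Diffusion 1.x style latent space: 4 channels,
    each side 8 times smaller than the image in pixels.
    """
    if width % downscale or height % downscale:
        raise ValueError("width and height must be multiples of 8")
    return (channels, height // downscale, width // downscale)


def noise_values(width, height):
    """Count how many random numbers make up the starting noise."""
    c, h, w = latent_shape(width, height)
    return c * h * w


print(latent_shape(512, 512), noise_values(512, 512))  # (4, 64, 64) 16384
print(latent_shape(576, 576), noise_values(576, 576))  # (4, 72, 72) 20736
```

With the same seed, the random numbers get laid out over a differently sized grid, so the model starts from a different noise pattern even though the aspect ratio is unchanged.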

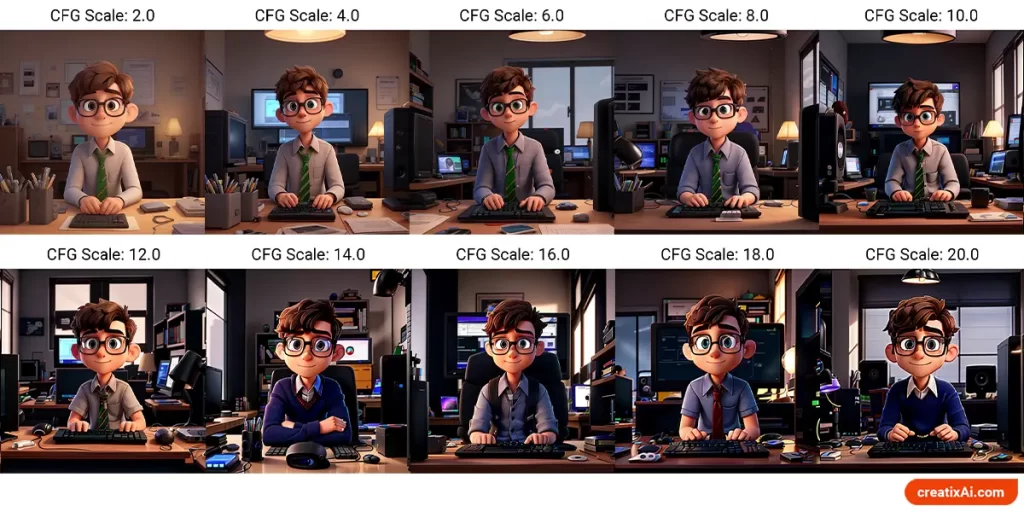

CFG Scale

CFG scale controls how strongly the image should follow the prompt. Users often choose a value around 7.

As you can see, anything above 10 is starting to look less appealing but more specific. It’s like putting the AI into a box of specificity, where it doesn’t have the freedom to add its creativity to the mix.

Verdict: 5/10

At lower values (1 to 10), the CFG scale affects the uniqueness of the final AI image. Users probably won’t go much above that because of the impact higher values have on the appeal of the final art.
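For the curious, CFG stands for classifier-free guidance. In its commonly described form, the model makes two predictions at each step, one with the prompt and one without, and the CFG scale decides how far to push the result toward the prompted one. The Python sketch below applies that formula to plain lists of numbers. The function name and the tiny example values are assumptions for illustration; a real implementation works on large tensors. As far as I understand, many interfaces use the negative prompt in place of the "without prompt" prediction.

```python
def apply_cfg(uncond, cond, scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]  # made-up prediction without the prompt
cond = [1.0, 3.0]    # made-up prediction with the prompt

for scale in (1.0, 7.0, 15.0):
    print(scale, apply_cfg(uncond, cond, scale))
```

At a scale of 1 the result is simply the prompted prediction. Higher values exaggerate the difference between the two predictions, which matches the "more specific but less appealing" look at high CFG values.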

Highres. Fix

I always suggest using highres. fix in the beginning stages when you are just checking out each seed, as well as later on. The truth is, if you like an image generated without highres. fix and decide to add it later, the image will change significantly.

Testing:

- no Highres. fix; (original image) — with it at 1.1 — with it at 2;

- denoising strength 0.4 — 0.8; (0.6 in original)

- Hires steps 10 — 50 (original is the same steps)

- Changing height from 512 to 648 in highres

There are also different upscalers, but we won’t be looking into that today.

Verdict: 5/10

While the images above look similar in composition and colors, they are also distinct in detail.

When it comes to image uniqueness, this is not the most important variable. But, when talking about image quality – it certainly is.

Seed

The seed number looks something like this: “1224291933”. Below, I changed one random digit of the original seed to a different digit.

Verdict: 10/10

The seed strongly affects the look of your image. It should be at the top of your priority list.

If you are copying someone else’s prompt and settings, play with the seed to get a completely unique image.
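The seed matters this much because it determines the random noise the image starts from. The Python sketch below uses the standard library's random module as a stand-in for the real noise generator, which is an assumption made purely for illustration. It shows the general behavior: the same seed reproduces the same noise, while a seed that differs by a single digit produces unrelated noise.

```python
import random


def initial_noise(seed, count=4):
    """Draw a few Gaussian values from a generator seeded with `seed`.

    A stand-in for the much larger noise tensor a real generation uses.
    """
    rng = random.Random(seed)
    return [round(rng.gauss(0.0, 1.0), 4) for _ in range(count)]


same_a = initial_noise(1224291933)
same_b = initial_noise(1224291933)
changed = initial_noise(1224291934)  # last digit changed

print(same_a == same_b)   # same seed, same noise
print(same_a == changed)  # different seed, different noise
print(changed)
```

This is also why sharing a seed together with every other setting lets someone reproduce an image, while changing only the seed gives a fresh one.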

Clip Skip

Clip skip is an advanced feature that you must enable in the settings before you can use it. Clip skip works better on some models than others and changes the look of the final image.

Verdict: 8/10

Clip skip definitely affects the composition and look of the final AI-generated image. However, you might not be able to use it on all checkpoints.

Extra Networks

Extra networks such as Lora, hypernetworks or textual inversion are often used to achieve a specific feature, such as a style, pose, or a person’s likeness.

What I’m curious about is: if you use an unrelated Lora, will it change the image enough to be unique without taking over my desired outcome?

First, I tested different Lora weights with a Lora completely unrelated to my prompt – Ariel (The Little Mermaid).

Prompt: Human body with a rabbit head, Therianthrope, wearing an orange hoodie. at night, night city lights, vibrant colors. digital painting, trending on artstation, masterpiece, highres, cinematic, dramatic lighting

(Left to right): original, Lora at 0.1; 0.2; 0.3; 0.5; 0.6; 0.7; 0.9.

The outcome is surprisingly good: no mermaids are floating around, and the concept remains. Also, the images look unique, especially at the higher Lora values.

I kind of like the result at 0.3 Lora, so I will test with some more unrelated Loras at this value to see the final outcomes.

I used a different Lora for each image above, most completely unrelated to anything in this image, including style. Most of them followed the prompt, apart from the last two.

As you can clearly see – extra networks CAN be used to create even more unique images while following your original concept.

What if instead of adding one Lora at 0.3, you added 2 or 3? The results would be even more customized.

Verdict: 9/10

Depending on the Lora you choose (and it doesn’t have to be relevant), you might get slightly to extremely unique results. Adding more than one only enhances this effect.

What are the chances that all the variables you use will be the same as the next person’s? Slim to none. Add Lora to the mix, and your image will surely be unique.
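If you want to repeat this kind of test yourself, the prompts can be built with a few lines of Python. This sketch assumes the AUTOMATIC1111-style prompt tag of the form <lora:name:weight>. The Lora file names "ariel" and "some_style" are hypothetical placeholders, and the prompt is shortened for readability.

```python
def with_loras(prompt, loras):
    """Append one <lora:name:weight> tag per (name, weight) pair."""
    tags = "".join(f" <lora:{name}:{weight}>" for name, weight in loras)
    return prompt + tags


base = "Human body with a rabbit head, wearing an orange hoodie"

# Sweep a single, hypothetical Lora over the weights tested above.
weights = (0.1, 0.2, 0.3, 0.5, 0.6, 0.7, 0.9)
sweep = [with_loras(base, [("ariel", w)]) for w in weights]

# Stack two hypothetical Loras at 0.3 each.
stacked = with_loras(base, [("ariel", 0.3), ("some_style", 0.3)])

for prompt in sweep:
    print(prompt)
print(stacked)
```

Keeping everything else fixed while only the tag changes makes it easy to see how much each Lora and weight contributes.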

“Are Stable Diffusion Images Unique?” My Answer:

AI-generated Stable Diffusion images are always unique, although several factors influence by how much.

Based on the tests above and my personal experience, here is how much each factor influences the uniqueness of your AI-generated image:

- Prompt 10/10

- Seed 10/10

- Image size 10/10

- Negative prompt 9/10

- Extra Networks 9/10

- Checkpoint 8/10

- Clip Skip 8/10

- Highres. fix 5/10

- CFG scale 5/10

- Sampling steps 4/10

- Sampling method 2/10

Given that there are so many factors that influence each generation, your chances of generating a very similar image to someone else’s are not high at all.

Although, as I mentioned previously, if you use a checkpoint trained on only certain types of faces, for example, then the faces in your generation will be similar to someone else’s. So, pay attention to that.

Also, if you like someone’s generation and want to use their prompt and settings, make sure to adjust some of the high-effect variables to get a unique image.

There’s nothing to worry about. Have fun creating!